Now, in pursuit of Goal A, they've issued a report that is just bonkers wrong. Beyond the usual Just Making Stuff Up part, there is, as Chalkbeat reporter and perennial bad data debunker Matt Barnum pointed out on Twitter, "a total conceptual misunderstanding."

The general theme of the report is "OMG! The learning losses are not only terrible awful, but it will take forever and a day to recover from them!!" It is absolutely in keeping with the idea that test scores are like stock market prices and not, say, the collected scores of a group of live humans that changes each year.

The subheading signals the dopiness here: "While two decades of math and reading progress have been erased, US states can play an important role in helping students to catch up."

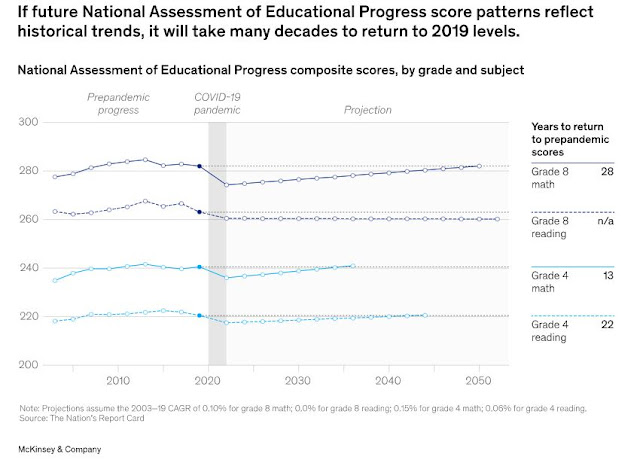

The New York Times used the same damn dumb idea in their headline about NAEP scores, and it still makes no sense. What exactly has been erased, and from where was it erased? And here comes a chart that I'm nominating as The Dumbest Chart Ever Produced By People Who Make More Money Before Lunch Than I Ever Made In A Year.

Let's talk about this for a second. There is an obvious issue, which is that the rates of improvement are made up baloney. "Reflect historic trends"? Do you mean, the kind of improvement we saw after the last time U.S. schools were disrupted by a major pandemic? You can download the full article and still not find an explanation of where these numbers came from, but you can find the old numbers to confirm that no graph of NAEP scores ever looked like this slow steady climb.

But not only are these numbers just made up, they also make no sense.

The chart says that 8th graders won't catch up to pre-pandemic scores for twenty-eight years, which means that students who haven't even been born yet, and so presumably have not been affected by the pandemic, will still get low scores because of the pandemic!!??!! How does that even work?

The only possible way this chart could be close to conceptually defensible is if your theory is that the pandemic wiped out everything teachers have learned about how to teach in the last twenty years, and it will somehow take them until 2050 to reacquire that knowledge, though we would of course be talking about teachers who haven't even been born yet!! (Sorry about all the punctuation, but it's all I can do not to type this post in italics and caps.)

This is not how this works. This is not how any of this works.

There's more in the report that we could pick apart, like the use of the whole "weeks of learning loss" foolishness, a half-assed attempt to correlate learning loss with school closure, and a bunch about how much relief money is still in play, because it wouldn't be a McKinsey report if they weren't trying to market something. The ultimate conclusion is that districts should really try to fix this Terrible Thing by spending that relief money on The Right Products.

But there's no point in digging deep on the rest, because that first chart announces so clearly that the writers of this paper have wandered off down the wrong path. How does this happen? There are four authors, three of whom are supposed to be McKinsey education experts. Of the three, one taught for one whole year for KIPP (then became an education consultant and then went to the Gates Foundation), one put in three years in a DC school (it doesn't say Teach for America, but he did his three years and went straight to McKinsey), and one has no actual education experience at all.

It will be really unfortunate if any policy makers or leaders actually take this report to heart. This is why folks who actually work in education mistrust "expert consultants," and unfortunately why some teachers distrust their own judgment because surely an internationally respected major consulting firm couldn't be so wrong, could they?

Well, yes, they could. Don't be intimidated.
